October 10, 2024

By Sekhar Sarukkai – Cybersecurity@UC Berkeley

In the first blog in our series, “AI Security: Customer Needs And Opportunities,” we provide an overview of the three layers of the AI technology stack and their use cases, along with a summary of AI security challenges and solutions. In this second blog, we address the specific risks associated with Layer 1: Foundational AI and mitigations that can help organizations leverage the many benefits of AI to their business advantage.

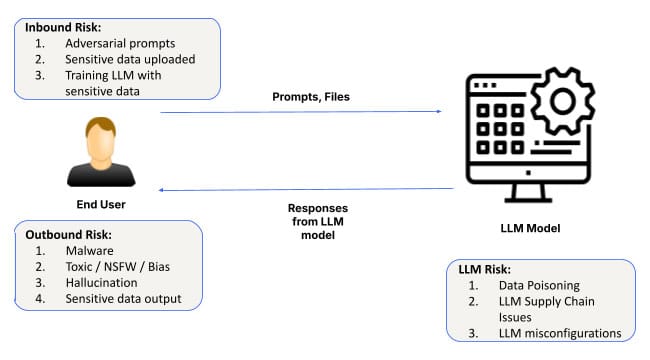

As artificial intelligence (AI) continues to evolve and establish a foothold, its applications span industries ranging from healthcare to finance. At the heart of every AI system are foundational models and software infrastructure to customize those models. This layer provides the groundwork for all AI applications, enabling systems to learn from data, detect patterns, and make predictions. However, despite its importance, the Foundational AI Layer is not without risks. From prompt engineering attacks, to misconfigurations, to data leakage, security challenges at this layer can have far-reaching consequences.

Let’s take a deep dive into the security risks associated with Foundational AI and what businesses need to watch for as they adopt AI-driven solutions.

Security Risks at the Foundational AI Layer

1. Prompt Engineering Attacks

One of the most prevalent risks at the Foundational AI layer is prompt engineering, where attackers manipulate AI inputs to produce unintended or harmful outputs. These attacks exploit vulnerabilities in how AI models interpret and respond to prompts, allowing malicious actors to circumvent security protocols.

New techniques such as SkeletonKey and CrescendoMation have made prompt engineering a growing threat, with various forms of “jailbreaking” AI systems becoming commonplace. These attacks can be found on the dark web, with tools like WormGPT, FraudGPT, and EscapeGPT openly sold to hackers. Once an AI model is compromised, it can generate misleading or dangerous responses, leading to data breaches or system failures.

For example, an attacker could inject prompts into a customer service AI system, bypassing the model’s constraints to access sensitive customer data or manipulate outputs to cause operational disruption.

To mitigate this risk, organizations must implement robust input validation mechanisms and regularly audit AI systems for potential vulnerabilities, especially when prompts are used to control significant business processes.

2. Data Leakage

Data leakage is another significant concern at the Foundational AI layer. When organizations use AI models for tasks like generating insights from sensitive data, there’s a risk that some of this information may be inadvertently exposed during AI interactions. This is especially true in model fine-tuning or during extended conversations with LLMs (Large Language Models).

A notable example occurred when Samsung engineers accidentally leaked sensitive corporate data through interactions with ChatGPT. Such incidents underscore the importance of treating prompt data as non-private by default, as it could easily be incorporated into the AI’s training data. While we see continuing advancements for privacy protections at the foundation model layer, you will still need to opt out to ensure that no prompt data finds its way into the model.

Additionally, historically exploited ways of data exfiltration could be more easily deployed in the context of a long-running conversation with LLMs. Being cognizant of issues like image markdown exploit in Bing chatbot as well as ChatGPT will also need to be addressed, especially with private deployments of LLMs.

Data exfiltration techniques that have historically targeted traditional systems are also being adapted for AI environments. For example, long-running chatbot interactions could be exploited by attackers using tactics like image markdown exploits (as seen in ChatGPT) to stealthily extract sensitive data over time.

Organizations can address this risk by adopting strict data-handling policies and implementing controls to ensure that sensitive information is not inadvertently exposed through AI models.

3. Misconfigured Instances

Misconfigurations are a common source of security vulnerabilities, and this is particularly true at the Foundational AI layer. Given the rapid deployment of AI systems, many organizations struggle to properly configure their AI environments, leaving models exposed to potential threats.

Misconfigured AI instances—such as allowing untrusted third-party plugins, enabling external data integration without proper controls, or granting excessive permissions to AI models—can result in significant data breaches. In shared responsibility models, organizations may also fail to implement necessary restrictions, increasing the risk of unauthorized access or data exfiltration.

For example, ChatGPT allows plugins to integrate with third-party services, which can be a major source of data leaks if untrusted plugins are inadvertently connected to enterprise instances. Similarly, improper access control configurations can lead to unauthorized users gaining access to sensitive data through AI systems.

While red teaming is not a new concept, it is particularly important across all layers of the AI stack since LLMs act as black boxes and their outputs are not predictable. For example, research from Anthropic, showed that LLMs could turn into sleeper agents with attacks hidden in model weights that may surface unexpectedly.

To reduce this risk, organizations should follow best practices for AI configuration by limiting the integration of third-party services, restricting permissions, and regularly reviewing AI environment configurations. Skyhigh Security’s secure use of AI applications solutions provides the tools and best practices to ensure AI systems are properly configured and protected.

Strengthening AI Security at Layer 1

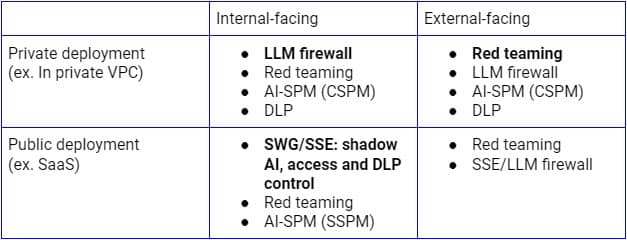

The above security risks need to be addressed based on different use cases. For internal-facing public deployments of LLMs, Secure Service Edge (SSE forward proxy) is the logical platform on which to extend controls to SaaS AI applications since enterprises already use it to discover, control access, and protect data to all SaaS applications and services. On the flip side, for deployments on private infrastructure, an LLM firewall that is a combination of SSE reverse proxy (or via LLM APIs) and AI guardrails will be the right paradigm to achieve Zero Trust security. Independent of the deployment model, continuous discovery, red teaming and configuration audits will also be crucial.

The above figure illustrates a comprehensive architecture for securing LLM use from within the enterprise or trusted users and devices. A similar approach for customers and unmanaged devices will primarily focus on the bottom flow, which includes the reverse proxy only.

Addressing these security risks early in the deployment of Foundational AI is crucial to ensuring the success and security of AI systems. To strengthen AI security at this foundational layer, organizations should:

- Conduct regular audits: Ensure that AI systems are regularly reviewed for prompt injection vulnerabilities, misconfigurations, and data-handling issues.

- Implement input validation: Use robust mechanisms to validate prompts and inputs, reducing the risk of prompt engineering attacks.

- Adopt data protection measures: Establish strong data governance frameworks to minimize the risk of data leakage during AI interactions.

- Secure AI environments: Follow best practices for configuring AI systems, especially when integrating third-party plugins or services.

The Path Forward: Securing AI from the Ground Up

Foundational AI serves as the backbone for many advanced AI applications, but it also introduces significant security risks, including prompt engineering attacks, data leakage, and misconfigurations. As AI continues to play a pivotal role in driving innovation across industries, businesses must prioritize security from the very beginning—starting at this foundational layer.

The next blog in our series will explore the security needs and opportunities associated with Layer 2: AI Copilots, virtual assistants and productivity tools that can help guide decision-making and automate a wide variety of tasks. To learn more about securing your AI applications and mitigating risk, explore Skyhigh AI solutions.